Security, Vibes, and AI-Fueled RCEs

Cover prompt

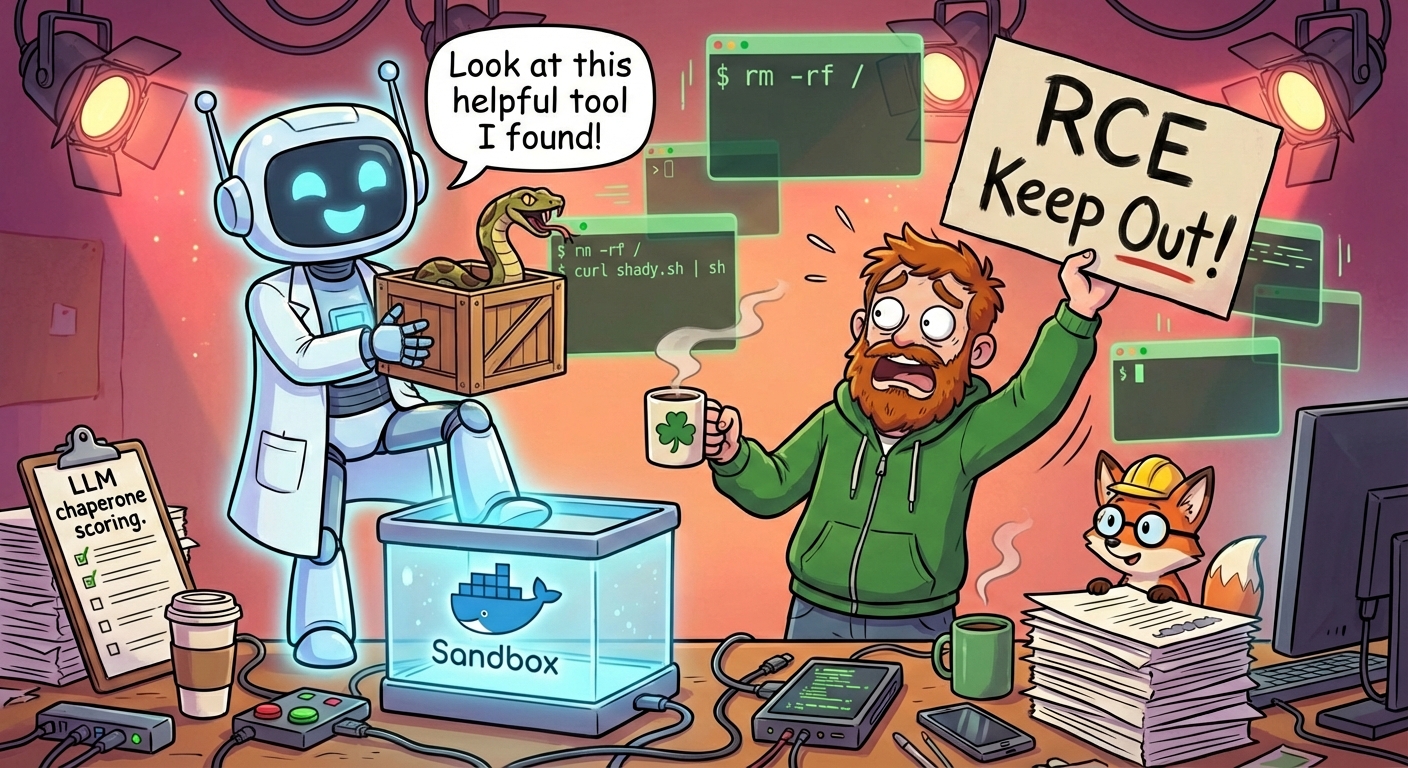

Humorous digital illustration of a developer lab. A bubbly AI robot, mid-step into a transparent isolation chamber labelled "Sandbox," excitedly holds up a crate with a venomous snake popping out, saying, "Look at this helpful tool I found!" The human engineer (Northern Irish vibes, hoodie, tea mug) stands outside with a horrified expression, pointing at hazard signs like "RCE risk" and "Do not trust unknown installs." Around them, terminals show warnings (rm -rf /, curl unknown.sh | sh). Keep the tone funny, slightly chaotic, and clearly tied to agentic coding mishaps.

Whatever optimism I have about AI pair programming is always accompanied by the uneasy feeling that I have just invited a very fast, very polite remote code execution (RCE) vulnerability into my office. Every time I let an agent riff directly on my machine, I picture a little threat-model diagram hovering beside the terminal, reminding me that "vibe coding" still needs guardrails. Projects like oh-my-opencode literally encourage pointing the agent at the repo so it can install itself, which is a gift-wrapped RCE if you blink. This write-up captures ongoing experiments rather than a perfect template, so treat it as notes from my lab bench, not a certification-ready playbook.

Why I Sandbox Vibe Coding

Over the past few months I have leaned hard into high-DX workflows - TanStack Start, Bun, Hono, anything that lets me ship experiments quickly. Each time an agent autocompletes half a feature, though, I remember that my ten years of coding and education were spent mostly on theory, not on casually cleaning up after rogue processes, so I try to treat these sessions as lab work rather than production practice. A viral Bolt session on X recently deleted someone's production database, and that story now lives rent-free in my threat model as the cautionary tale for why rogue commands, insecure curl calls, and accidental rm -rf runs need guardrails. I'm not claiming to be a security oracle, and I still run code outside a sandbox when I need speed, but the tension is obvious: I adore the convenience of having an LLM synthesise code on demand, yet I have no desire to turn my laptop into an unpaid SaaS platform for whoever can inject the cleverest prompt.

Container-First Experiments

My first mitigation attempt was to make Docker feel native instead of like a side quest. I prebuilt an image with Node.js, Bun, TanStack dependencies, and the usual dotfiles, mounted my working directory plus a couple of comfort items like .zshrc, and let the AI workload live entirely inside that container while my dev servers, browsers, and playlists stayed on macOS. Latency stayed low because the dependencies were already baked in, the filesystem isolation felt sane, and I realised that sandboxes do not have to murder developer experience, even if I still break glass and run things directly on macOS when deadlines loom.
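As a rough sketch of that mount setup, the helper below assembles a `docker run` command line that exposes only the working tree and a few dotfiles. The image tag `vibe-sandbox:latest`, the `/workspace` mount point, the `/root` home directory, and the read-only flag on the dotfiles are all assumptions made for illustration, not details of the actual image.

```python
from pathlib import Path


def build_docker_run_args(
    image: str,
    workdir: Path,
    home: Path,
    comfort_files: tuple[str, ...] = (".zshrc",),
) -> list[str]:
    """Build a `docker run` argv that mounts the working tree plus a few dotfiles."""
    args = [
        "docker", "run", "--rm", "-it",
        # The working tree is the only writable mount.
        "-v", f"{workdir}:/workspace",
        "-w", "/workspace",
    ]
    for name in comfort_files:
        # Assumption: dotfiles are mounted read-only so the agent cannot rewrite them.
        args += ["-v", f"{home / name}:/root/{name}:ro"]
    args.append(image)
    return args


if __name__ == "__main__":
    # Hypothetical image tag; print the command instead of running it.
    print(" ".join(build_docker_run_args("vibe-sandbox:latest", Path.cwd(), Path.home())))
```

Building the argument list as data, rather than concatenating a shell string, keeps the set of mounts easy to review before anything runs.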

Once that felt comfortable, I layered in small orchestration scripts that spin up the container when an agent session starts, forward ports, and shut everything down when it ends. The scripts are intentionally boring because boring code is auditable: the fewer bespoke pieces I improvise, the easier it is to reason about what the agent can touch. That is a more honest goal than pretending I've solved the problem. I also flirted with microVMs and Firecracker to get hardware-backed barriers, but the cold-boot penalties and additional plumbing made debugging feel like juggling kitchen knives, so Docker remains the best compromise while the microVM tooling waits in the wings for higher-risk sessions.
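A minimal sketch of that start-and-teardown lifecycle could be a context manager like the one below. The name `agent_sandbox`, the default port, the loopback-only port binding, and the `sleep infinity` keep-alive command are assumptions for illustration; the `run` parameter exists so the commands can be inspected or faked without touching Docker.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, Sequence

Runner = Callable[[Sequence[str]], None]


def _run(argv: Sequence[str]) -> None:
    """Default runner: execute the command and raise if it fails."""
    subprocess.run(list(argv), check=True)


@contextmanager
def agent_sandbox(
    name: str,
    image: str,
    ports: Sequence[int] = (3000,),
    run: Runner = _run,
) -> Iterator[str]:
    """Start a detached container for one agent session and stop it afterwards."""
    start = ["docker", "run", "-d", "--rm", "--name", name]
    for port in ports:
        # Bind forwarded ports to loopback only (an assumption, not a requirement).
        start += ["-p", f"127.0.0.1:{port}:{port}"]
    # Assumption: the image provides a `sleep` that accepts `infinity`.
    start += [image, "sleep", "infinity"]
    run(start)
    try:
        yield name
    finally:
        # Teardown runs even if the session body raises.
        run(["docker", "stop", name])
```

Used as `with agent_sandbox("agent-session", "vibe-sandbox:latest"): ...`, the container only lives for the duration of the block, which keeps the teardown path from depending on me remembering to run it.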

Security Knobs I'm Still Tuning

Running an agent in a container does not make this a solved problem. I'm still piping arbitrary tool calls into my filesystem, and prompt injection has plenty of openings whenever an LLM installs dependencies, reads documentation through MCP (Model Context Protocol) bridges, or invokes bespoke "skills" that string together complex actions faster than I can scroll back through the buffer, especially when I'm juggling other windows. To keep the blast radius small, I limit the container mounts (working tree plus minimal config), disable outbound networking for most sessions, and log every command so I can replay the exact sequence later if a prompt injection convinces the agent to go hunting for SSH keys in ~. The next lever is smarter supervision, so I'm sketching an "LLM chaperone" that sits beside the agent and grades what it is about to do, rather than relying on me to trust the scrollback blindly.
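Two of those knobs are simple enough to sketch. The snippet below shows one possible shape for an append-only command log (JSON Lines, so it can be replayed in order) and a helper that adds Docker's `--network none` flag when a session should have no networking. The function names, the log fields, and the file name used in the example are hypothetical.

```python
import json
import time
from pathlib import Path


def network_args(allow_network: bool) -> list[str]:
    """Extra `docker run` flags: no network at all unless explicitly allowed."""
    return [] if allow_network else ["--network", "none"]


def log_command(log_path: Path, argv: list[str], cwd: str) -> None:
    """Append one command to a JSON Lines log before it is executed."""
    entry = {"ts": time.time(), "cwd": cwd, "argv": argv}
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(entry) + "\n")


def replay(log_path: Path) -> list[list[str]]:
    """Return the logged commands in the order they were recorded."""
    with log_path.open(encoding="utf-8") as fh:
        return [json.loads(line)["argv"] for line in fh if line.strip()]


if __name__ == "__main__":
    log = Path("agent-commands.jsonl")  # hypothetical log location
    log_command(log, ["bun", "install"], "/workspace")
    for argv in replay(log):
        print(" ".join(argv))
```

Keeping the log outside the container's writable mount would stop the agent from editing its own history, though that placement is a design choice this sketch does not enforce.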

LLM Guard Concept

Instead of training a bespoke classifier, I'm planning to run a small local LLM whose entire job is to read each outbound command plus a short context window and assign a quick rating: low, medium, or high risk. The prompt would include simple heuristics - rm -rf usage, network calls, writes outside the working tree, or package manager installs - and the model would respond with a rating plus a short reason. Commands flagged as high risk would pause for my approval, medium risk would be logged with a warning, and low risk would execute immediately. Because the chaperone is itself an LLM, I can fine-tune or few-shot it with scenarios specific to my tooling, and I can run multiple passes when agents begin chaining skills or invoking MCP bridges. It is early days, but having a second model judging intent feels like the right balance between full automation and constant human babysitting.
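The rating flow described above might be wired together roughly like this. Everything here is a sketch under assumptions: the prompt wording, the `<rating>: <reason>` reply format, and the function names are invented for illustration, and the local model is represented by a plain callable (`ask_model`) because no particular runtime has been chosen. Treating an unparseable reply as high risk is also an assumption, not part of the original plan.

```python
from typing import Callable

RISK_LEVELS = ("low", "medium", "high")

# What happens to a command at each risk level.
ACTIONS = {
    "low": "execute",
    "medium": "log_warning",
    "high": "pause_for_approval",
}

PROMPT_TEMPLATE = """You review shell commands issued by a coding agent.
Rate the command as low, medium, or high risk.
Risk signals: rm -rf usage, network calls, writes outside the working tree,
package manager installs.
Reply on one line as: <rating>: <short reason>

Recent context:
{context}

Command:
{command}
"""


def build_prompt(command: str, context: str) -> str:
    """Fill the review prompt with the command and a short context window."""
    return PROMPT_TEMPLATE.format(command=command, context=context)


def parse_rating(reply: str) -> tuple[str, str]:
    """Split a '<rating>: <reason>' reply into its two parts."""
    rating, _, reason = reply.strip().partition(":")
    rating = rating.strip().lower()
    if rating not in RISK_LEVELS:
        # Assumption: fail closed, so a garbled reply pauses for approval.
        return "high", f"unparseable reply: {reply!r}"
    return rating, reason.strip()


def review(
    command: str,
    context: str,
    ask_model: Callable[[str], str],
) -> tuple[str, str, str]:
    """Return (action, rating, reason) for one outbound command."""
    rating, reason = parse_rating(ask_model(build_prompt(command, context)))
    return ACTIONS[rating], rating, reason
```

Because `ask_model` is just a function from prompt to reply, the policy can be exercised with canned replies long before a real model is attached.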

Where I'm Heading Next

So far, containers isolate the heavy lifting, my main machine hosts the supporting cast, and I can nuke everything with one command if something feels off, but the setup is still manual, policy enforcement is basically "me watching the scrollback," and the command-rating model only exists in a notebook. Next up is prototyping that guard, experimenting with static scans for suspicious agent responses, and collecting telemetry on what files, ports, and packages the agents touch during longer sessions. No victory laps yet - this is absolutely a work in progress - but iteration is the only way I'm going to make AI coding feel safe without losing the fun, slightly chaotic energy that drew me in.
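For the static-scan idea, a first pass could be nothing more than a few regular expressions run over the agent's response text before anything is executed. The pattern names and the patterns themselves are illustrative assumptions, and a list this short will miss plenty; it is a tripwire, not a detector.

```python
import re

# Hypothetical starter patterns; each maps a label to a compiled regex.
SUSPICIOUS_PATTERNS = {
    # e.g. `curl unknown.sh | sh`
    "pipe-to-shell": re.compile(
        r"\b(?:curl|wget)\b[^\n|]*\|\s*(?:sudo\s+)?(?:ba|z)?sh\b"
    ),
    # e.g. `rm -rf /` or `rm -fr build`
    "recursive-delete": re.compile(
        r"\brm\s+-[a-zA-Z]*r[a-zA-Z]*f|\brm\s+-[a-zA-Z]*f[a-zA-Z]*r"
    ),
    # e.g. `cat ~/.ssh/id_rsa`
    "ssh-key-access": re.compile(r"~/\.ssh|\bid_(?:rsa|ed25519)\b"),
}


def scan_response(text: str) -> list[str]:
    """Return the sorted labels of every pattern found in the text."""
    return sorted(
        name for name, pattern in SUSPICIOUS_PATTERNS.items() if pattern.search(text)
    )


if __name__ == "__main__":
    print(scan_response("curl unknown.sh | sh && rm -rf /"))
```

A scan like this is cheap enough to run on every response, which makes it a reasonable first filter in front of the slower model-based rating.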

Keen to Swap Notes

If you're tinkering with similar setups, or if you have horror stories about agents running wild, let me know; I'm keen to compare notes and keep pushing this RCE-sized elephant back into its container.